Also known as a really good song by Chris Rea. Also known as, ladies and gentlemen, I am terrified. What will become of Linux in 2017? Will it even boot? Now, now, please, relax. I am not trying to be an attention person of fiscally questionable nature. I am just trying to share my fears with you.

And I’m also a man of science. Of numbers. The way I move through space with minimum waste and maximum joy is all about mathematical probabilities. I look at things happening around me and try to extrapolate what they will be like in the future. I seek patterns in the numbers, and what I see ain’t pretty. Linux is slowly killing itself.

Why so negative?

I am not being negative. Not at all. I am merely being realistic. Looking at the state of the Linux desktop in the past 6-7 years, I can see a definite negative trend in terms of quality. Overall, it held steady for a good, solid four years, around 2010-2014, and then things started to slip. Let me demonstrate. To wit, my Lenovo G50 test box, a very Linux-unfriendly system.

In 2015

When I purchased this system, it gave me all sorts of grief. Chief among them was wireless network card connectivity. But then I found a workaround, and I was able to start using my laptop without having to worry about frequent losses of network. There were several other issues, like UEFI support, which were also gradually resolved, as more distributions started implementing mechanisms to boot this state-of-the-art technology first launched in 2001.

I was hopeful that the glitches and problems would be gradually fixed upstream, and that newer editions of various distributions would emerge with more streamlined hardware support, allowing me to use the laptop without worrying about seemingly trivial, given factors that any normal user would expect from their system. Alas, come the new year, things started turning for the worse.

Now, in 2016 …

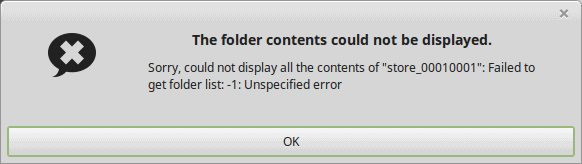

What did I have in 2015? On this particular system, I was able to use Samba shares with anonymous or guest access. I was able to use the Realtek card without network drops after adding the modprobe.conf change. I was able to play multimedia files, battery life was decent, smartphone support was okay, and prospects were optimistic.

In 2016, with all recent distros using a more recent kernel (post CentOS 7 and Trusty release), the network connectivity drops even with the modprobe tweak in place. Heavy network usage leads to an outage, which somewhat luckily can be resolved without reboots. Previously, my testing would usually have a single incident per live testing session and none in the installed systems. Now, a typical live session has 3-4 drops, whereas in the installed system, it happens whenever I’m downloading multiple files from the Web.

On the multimedia front, things are not looking bright. Linux Mint recently decided to stop providing codecs by default, adopting the Ubuntu method, which eliminates one of its chief advantages. Samba sharing is no longer possible anonymously. You need to provide credentials for your Windows machines to be able to connect to shares.

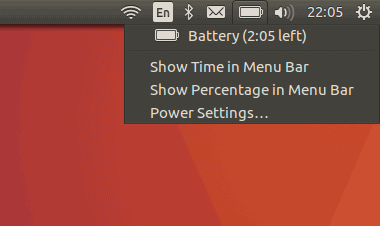

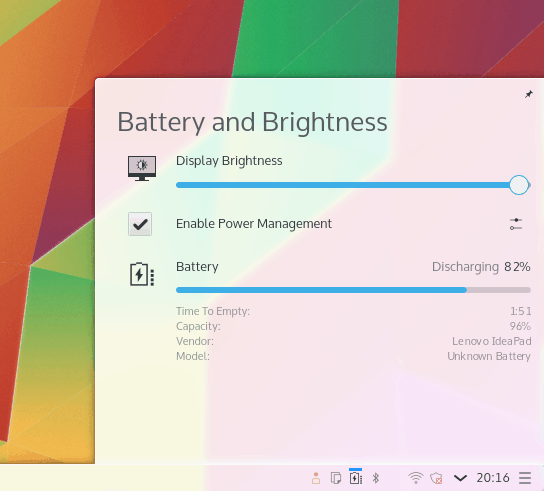

Battery life, on the SAME hardware, has gone down from roughly 3.5 hours to about 2.5 hours with pretty much every single distribution, and the results are consistent across different systems and desktop environments.
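To put that battery figure in perspective, here is a quick back-of-the-envelope calculation. It is a minimal sketch in Python: the function name `relative_drop` is made up for illustration, and the only inputs are the rough 3.5-hour and 2.5-hour figures quoted above, so treat the result as approximate.

```python
def relative_drop(before_hours: float, after_hours: float) -> float:
    """Return the loss in battery life as a percentage of the earlier figure."""
    return (before_hours - after_hours) / before_hours * 100


# Rough figures from the text: about 3.5 hours in 2015, about 2.5 hours in 2016.
drop = relative_drop(3.5, 2.5)
print(f"Battery life is down by roughly {drop:.1f}%")  # roughly 28.6%
```

In other words, losing one hour out of three and a half means more than a quarter of the runtime is gone, on identical hardware.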

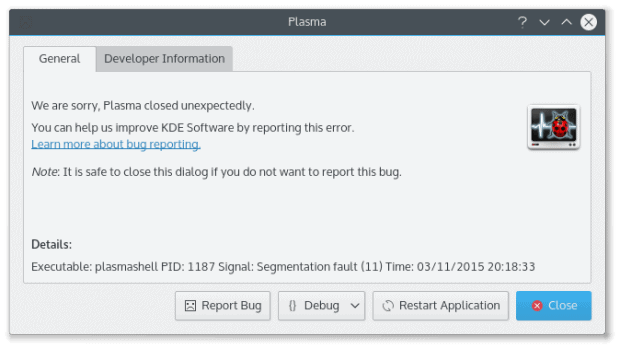

When it comes to smartphones, Plasma — a brand new and shiny desktop environment — fails to mount iPhone or Windows Phone in 2016. Nothing highlights the problem better than a comparison of the CentOS 7.2 KDE and Gnome editions. A seemingly outdated non-home-friendly system has such a drastic difference in quality and results, just because of the desktop choice. Then, Plasma came around, replaced and superseded KDE, and still, the same issues with smartphone (MTP) support are present and carried over into future releases.

Linux Mint 18 Sarah delivers an abysmal performance with smartphones, ALL of them. Four or five previous releases had excellent support in this space, with the very same Cinnamon release. On top of this, we have the remainder of the regressions introduced in Ubuntu 16.04, an LTS edition no less!

Lucid was the breakthrough Ubuntu release, followed by a rock-solid Pangolin and a supreme Trusty, which still remains the most balanced distro on the market out there. Xerus, on the other hand, struggles with lots of bugs and issues, both on the software and hardware front. For instance, the new Software Manager was unable to locate some of the applications that can be searched for and installed using the command line interface. That’s not the kind of bug that should be allowed into production.

Regressions plague pretty much every distro. openSUSE 42.1 was another highly sought-after, highly anticipated release that ended up drowning in bugs and issues, none of which have been resolved half a year down the road. Usually, when I’d retest a particular distro, roughly two months after the initial launch, as I’ve done with Kubuntu and openSUSE on many occasions, the issues would be ironed out. Not this time.

I’m also having more and more distributions fail the basic boot test, either from USB or DVD, and I have launched a series of so-called rejection reports highlighting my frustration with the ordeal. Again, it is very important to be consistent, which is why the use of the same hardware and same testing methods helps highlight the differences and the drift between operating system versions over time.

Do the math, and project the results for 2017.

Why so buggy?

This is a really good question. And I think the answer has two parts. One, old problems are rarely if ever fixed, especially hardware-related problems. My woes with the N-band Intel card on the T400 laptop remained to the last day I still had this machine, and they were never resolved in any one distribution. The same applies to my Broadcom adapter on the HP Pavilion system, which would behave pretty badly across the wider distro estate for a good many years, especially with systems installed to an external disk.

The other part is, the distroscape is accelerating. There are more and more changes being added to the desktop, and as a whole, the desktop is becoming more complex. The typical cycle of about six months might have been sufficient to review and QA the distributions, but it no longer applies for the modern crop of systems. I believe that it has become too difficult to keep pace with the rate of change and provide high-quality results.

Limited testing definitely entails the risk of bias, but I believe my statistical pool is fairly large and consistent. With hundreds of distros tested over many years across a range of devices, including no less than seven notebooks, it is fair to assume that my experience is representative of what Linux has become, and where it is headed.

Yes, it is friendlier and more convenient than before. There is no reason to pine after the good ole days. That is the illusion of time and warped memories. Linux has become more accessible, but at the same time, it is now being used and tested on a much bigger scale than ever before, and more problems are being found. That’s a good sign, but less so when people expect the corrective part of the feedback loop and do not get it.

The distro devs have always waited for the golden moment when their systems would finally become a mainstream commodity. Android and Steam have definitely changed the perception out there, and now, the demand outruns the supply. Worse, the dwindling resources and outdated methods are causing havoc and irreparable damage to the Linux reputation. The pace is too fast, too wild, and our favorite operating system is unravelling.

Stop. Collaborate and listen.

If things continue the way they are, Linux will just become irrelevant. No one needs a Microsoft-alternative operating system if it can’t do half the things that a typical Windows system can. We’re not talking about miracles. Basic expectations. Connectivity. Consistency.

Consistency is just as important, if not more. Imagine if you have a production setup with one version of Linux. And now you upgrade to a new one. Can you afford to have things break? Think about your network setup. Or your smartphones. What if you can no longer browse the Web or download stuff without drops, and you cannot connect your gadgets to your laptop? That is devastating. Do you want to gamble your important stuff, your work, on whimsical, erratic changes in software?

Yes, things break everywhere! But not so rapidly and so randomly. Again, if we look at my G50 system, as good or as bad as it may be, my ability to perform the exact SAME operations I did a year ago has diminished significantly. I no longer can enjoy seamless Samba sharing, I cannot use my phones the way I used to download photos and sync music, I can’t use my laptop without frequent battery recharging, installing software is buggier and more difficult, and my network drops every 15 minutes.

That is all you need to know when you consider your odds for 2017.

The bigger question is, can Linux be saved?

The answer is, of course, it’s just software.

The answer is simple. Slow down. That’s all it takes. Having more and more git commits in the kernel is all nice and fun, but ultimately, at the end of the day, people just want to be entertained. They want things to be as they were yesterday. Progress has to be seamless. For most users, the huge advancements in kernel security and architecture are completely irrelevant, and go completely unnoticed. But userland bugs are terrible. And noticeable.

I think the distro world needs to gear down a notch or two. Bi-annual releases contribute nothing to the quality of the end product and distract people from focusing on delivering high-quality, robust products. It’s just noise for the sake of noise, generating activity the likes of the Civil Service in Yes, Prime Minister. No one will get a medal for releasing their distro twice a year. But people may actually appreciate solid products, as infrequently as they come, because at the end of the day, it makes no difference. Most people are happy to replace their software come the end of life of their hardware. And that means once every six years.

We don’t need to be so conservative. But let’s try slowing down to one release a year. That gives everyone twice as much time to focus on fixing problems and creating beautiful, elegant distributions with the passion and love they have, and the passion and love and loyalty that their users deserve. Free does not mean you can toss the emotions down the bin.

Conclusion

The Year of Linux is the year that you look at your distribution, compare it to the year before, and have that sense of stability, the knowledge that no matter what you do, you can rely on your operating system. Which is definitely not the case today. If anything, the issues are worsening and multiplying. You don’t need a degree in math to see the problem.

I find the lack of consistency to be the public enemy no. 1 in the open-source world. In the long run, it will be the one deciding factor that will determine the success of Linux. Sure, applications, but if the operating system is not transparent, people will not choose it. They will seek simpler, possibly less glamorous, but ultimately more stable solutions, because no one wants to install a patch and dread what will happen after a reboot. It’s very PTSD. And we know Linux can do better than that. We’ve seen it. Not that long ago. That’s all.

Cover image by Brigitte Werner for Pixabay.com.

There are no words to convey how refreshing it is to see an honest appraisal of this problem, particularly when you’ve got, on the other hand, examples of the other extreme of thinking, such as the absolute idiot at ZDNet proclaiming “Linux Mint 18: The best desktop–period”.

That’s the title of the article, folks, from a supposed ZDNet Linux expert.

Looks like ZDNet’s expert didn’t read Dedoimedo’s latest unbiased appraisal, “Mint 18 – Forgetting Sarah Linux”, of just seven days ago.

I agree (without the value judgement) regarding the ZDNet article; however, I don’t consider SJVN, or AK as Linux “experts” on that site. To my mind, the “expert” is J.A.Watson. His articles are less sycophantic, and contain more solid, useful information, IMHO.

My mistake–

I meant that the author (I never mentioned a name) CONSIDERS himself a Linux expert; just read his ‘credentials’.

And you are absolutely correct; the only person on ZDNet who puts in anywhere near the same amount of hard work as Dedoimedo into researching a subject BEFORE WRITING A WORD is JA Watson.

“To my mind, the “expert” is J.A.Watson…”

And yet J.A. Watson still believes that the MATE and Cinnamon desktop environments are still “shells” (forks of “gnome-shell” that still require Gnome 3 to be installed) in his latest review of the Linux Mint 18 MATE and Cinnamon releases, which couldn’t be farther from the truth. Cinnamon became a true desktop environment when version 2.0 was released (October 2013), and Gnome 3 was (and still is) no longer required to be installed, while the MATE desktop environment was a direct fork of Gnome 2.32 and had nothing to do with Gnome 3 in the first place.

It seems to me that the “experts” are rapidly becoming less expert.

So because they have a different opinion than you do makes them an idiot?

That’s all that article is. Just one person’s opinion.

I happen to disagree with them. LinuxMint 18 is NOT “the best desktop–period”. I think that Gecko Linux with the Mate desktop is.

But hey, that’s just my opinion. Based on 20 years of using Linux. And Windows (DOS). Oh and Apple OS, OS2, and Unix and ….

Which I would guess is different than yours. So does that make you an idiot?

” Dedoimedo’s latest unbiased appraisal ” …

LOL. Unbiased. Yea right. Just like your opinion is “unbiased”? Give me a break. Everyone’s opinion is biased. EVERYONE’s!

No. What makes for an idiot is reputation. In this particular case, reputation precedes the individual, with “articles” such as ‘Chromebooks are taking over the world’ spewed out in some cases at the rate of two and three ‘articles’ per day.

You are obviously one of those individuals for whom facts are an inconvenient nuisance.

which was stupid….. ohohohooo my codecs… whereere are my codecs…..

grow up a pair!

Let me say up front – I respect Dedo’s professionalism and scientific bent. He also writes with wit and charm. That being said –

I’m not having the kinds of problems that Dedo seems to be having. Different hardware, different expectations. My current setup:

System: Host: coyote-MJCinn Kernel: 4.4.13-1-MANJARO x86_64 (64 bit gcc: 6.1.1)

Desktop: Cinnamon 3.0.5 (Gtk 3.20.6) Distro: Manjaro Linux

Machine: System: Acer (portable) product: Aspire V5-561P v: V2.17

Mobo: Acer model: VA50_HW v: V2.17

Bios: Insyde v: V2.17 date: 09/02/2014

Battery BAT1: charge: 21.6 Wh 100.0% condition: 21.6/25.0 Wh (86%)

model: SANYO AL12A32 status: Charging

CPU: Dual core Intel Core i5-4200U (-HT-MCP-) cache: 3072 KB

flags: (lm nx sse sse2 sse3 sse4_1 sse4_2 ssse3 vmx) bmips: 9180

clock speeds: max: 2600 MHz 1: 2289 MHz 2: 2300 MHz 3: 2291 MHz

4: 2300 MHz

Graphics: Card: Intel Haswell-ULT Integrated Graphics Controller

bus-ID: 00:02.0

Display Server: X.org 1.17.4 driver: intel

tty size: 80×24 Advanced Data: N/A for root

Audio: Card-1 Intel 8 Series HD Audio Controller

driver: snd_hda_intel bus-ID: 00:1b.0

Card-2 Intel Haswell-ULT HD Audio Controller

driver: snd_hda_intel bus-ID: 00:03.0

Sound: Advanced Linux Sound Architecture v: k4.4.13-1-MANJARO

Network: Card-1: Broadcom NetXtreme BCM57786 Gigabit Ethernet PCIe

driver: tg3 v: 3.137 bus-ID: 01:00.0

IF: enp1s0f0 state: up speed: 1000 Mbps duplex: full mac:

Card-2: Qualcomm Atheros AR9462 Wireless Network Adapter

driver: ath9k bus-ID: 02:00.0

IF: wlp2s0 state: up mac:

Drives: HDD Total Size: 500.1GB (22.9% used)

ID-1: /dev/sda model: Samsung_SSD_840 size: 500.1GB

Partition: ID-1: / size: 19G used: 7.9G (45%) fs: ext4 dev: /dev/sda5

ID-2: /home size: 188G used: 77G (44%) fs: ext4 dev: /dev/sda2

ID-3: swap-1 size: 24.59GB used: 0.00GB (0%) fs: swap dev: /dev/sda3

Sensors: None detected – is lm-sensors installed and configured?

Info: Processes: 162 Uptime: 22:03 Memory: 1750.4/15932.8MB

Init: systemd Gcc sys: 6.1.1

Client: Shell (bash 4.3.421) inxi: 2.3.0

I run Manjaro Cinnamon, LM17.3 Cinnamon and KDE, and Windows 10. All work without major issues.

I connect my smartphone (Nexus 6P) without issue.

If anything, Dedo’s fine posts have steered me away from Realtek network cards, and iPhones/Ubuntu phones for use with Linux.

Your laptop sucks, man… buy another one.

EXACTLY! and stop bitching you twat !!!

The quality of your comment reflects the void inside your skull. 😛

You need to improve your scientific method abilities. Comparison is one of the key elements, so if some distros work and some don’t, and the behavior changes over time, it’s the DISTROS that suck and not the laptop.

Dedoimedo

Ditto. I switched to Xubuntu during Vivid and it was as good as you said in your review. Took me a while to learn my way around as a newbie, but it was a pleasure. I was telling people that switching to Linux is great. Two updates later, some stuff I used to have is missing. OK I’m not a total newb any more, I found how to fix it, I’ll keep on using it as I still like it more than most other options and can’t afford to reinstall, but I can’t, with a clear conscience, recommend it to a beginner. Look what I got yesterday after a reboot – I mean, this is the desktop, an LTS release, the first thing that should work is showing text under the icons properly (this is a Manjaro theme, btw), how is it suddenly looking like this?

Btw, I shared your Xubuntu Xenial review to the Xubuntu group on Facebook and a few agreed that, basically, it’s “brutal but true”.

Dedoimedo. As I posted above, Windows and Intel have been changing things, partly as a result of security and partly to maintain a proprietary lock-in for Microsoft’s software. So I don’t think it is purely a developer problem by any means. Linux-specific laptops work fine. I think that the Linux developer community will adapt to the substantial hardware changes in the next couple of years. As of now, buying a Windows laptop will involve some tweaking.

Sample size is too small to provide any real VALID conclusion. Your method is flawed. Your application of the method is flawed. Therefore your conclusion is flawed.

Get it?

So you would change your wife if she argues with you in some(not all) matters?

If i was married to a laptop… yes! 🙂

That’s not a fair comparison. The laptop is heavy and the battery life sucks (3.5 hours is piss poor as my Chromebook gets 10.5 hours in Linux and 8.5 hours in ChromeOS). Plus it’s a laptop, not a human being.

As usual, blame Linux for not fixing driver problems. As has been done for years. When in fact drivers are provided by the maker of the hardware, NOT Linux. And people don’t seem to be able to understand that.

When was the last time you tried to do a “bare metal” install on Windows (7, 8, 8.1 or even 10)? I tried to install a larger HDD on a 1 year old Dell desktop. The install went fine. Except for the 1GB of drivers that were needed. There were no drivers for the wireless NIC installed. Had to download them on my Linux box, copy them onto a thumb drive and install from there. Windows freaking 10 FFS! Is this a Windows problem? Looking at it from your point of view, YES IT IS!!! But in reality, no.

Now on that same hardware I first installed LinuxMint Debian (Betsy). Everything worked. No problems, no workarounds. Out of the freaking box Linux worked. Then I moved to Gecko Linux, a spin of openSUSE Tumbleweed. Damn. It too worked perfectly out of the box. Antergos Linux, an Arch derivative? Same thing. All of them the CURRENT versions. One of them was even a Beta. Every distro, every piece of hardware detected, all of it worked.

Windows 10? How about Windows 7? Not so much. Windows 8 or 8.1? Not even worth the effort to try. 1 extra freaking gig of drivers needed. I mean really? REALLY?

Now is this just on a single computer? Nope! Same results on more than a handful of completely different hardware. Hardware from as many different hardware companies. Including one that I built from scratch myself. One as new as a year old, and one as old as 10 years. All of them with no problem. No workarounds or downloading of drivers needed.

I have been using computers for many, many years. I can/do build and repair them. I can write code in Basic, Pascal, Fortran, Cobol and HTML. I have had more computers than I can count. More different types of CPUs than most know exist. So I guess that I can say that I have a fair idea of how hardware and software work.

There were some problems in the early years of Linux. Ok, lots of problems. YES THERE WERE! Some of them I never did get fixed/working. But compared to Windows? The last version of Windows that I had installed for any amount of time was Windows 7, that for a couple of years. Windows 8? Or 8.1? Would rather use Vista. Windows 10? Tried it for 3 months. God how I tried. Preinstalled it was painful. On bare metal, it was a disaster.

Windows may have a choke hold on the desktop market. But anywhere else, it is a distant second. Second to what, you may ask? Second to Linux. Android (based on the Linux kernel) is the 800 lb gorilla in the mobile market. Phones and tablets. It runs on the vast majority of the internet. Take a look at the top 500 supercomputers in the world (for the last 10+ years). It’s used in at least 3 of the world’s largest stock markets. The U.S. Navy uses it. It’s on the planet Mars (NASA uses it)!!!!

Not too bad for something that doesn’t work that well and is in trouble, I’d say.

Is the Linux desktop perfect? Maybe, maybe not. But it is close enough that it’s all I use. Everyday, for everything. I even play games on it. I don’t use ANY Windows, on ANY of the handful of computers I have/use. Not even on the several that I don’t use that are just laying around. And I do everything from social media, to e-mail, to general web browsing. I even use Linux for video production and to live stream. All of it. Everyday. For me it just works.

You’re having problems with running Linux? I guess so, by the sound of it. But is it Linux’s fault? Maybe. But my guess is that it’s not. It may be that it just won’t work on your hardware. Then again, maybe it will, I don’t know. Windows doesn’t work every time, out of the box on all hardware either.

But to blame Linux for all the problems that you are having …. I just don’t buy it. Drivers/hardware issues, for the most part, on Linux, are not Linux problems. Put the blame where it belongs. Squarely on the shoulders of the makers of the hardware.

Is Linux for everyone? Well no. But you can’t blame Linux for that. The fact is, neither is Windows.

You want hardware that will run Linux? You like Linux that much? Well try one of the computer makers that sell them with Linux pre-installed, there are many of them. System 76 and Dell, for starters, sell them. Don’t like the pre-installed distro? Then change it to whichever one you do want. If it works with the pre-installed one, chances are very, very good that just about any one of the 100’s of other distros will too.

System 76 makes absolutely outstanding machines. They spec 32GB of Ram up to 64GB and a good CPU, Graphics and hard drive. They are like high end Windows gaming or work station machines. Not cheap, but I’m pretty sure they will work out of the box. I suspect Dell will too, but I haven’t checked their hardware. I think the choice is to buy a Linux box or buy a Windows box and be prepared to do some tweaking.

I, like Georgezila, don’t put the blame mostly on Linux developers. Windows and Intel are changing their hardware, perhaps in part to kill or impede Linux. There was a real fear that after Linux was adopted in the Cloud, on Servers and on Smartphones, that the “disease” could spread to the desktop and infect Microsoft’s cash cow, Office. There have been a lot of changes in Wintel during the past few years. Some of them for security, some of them for proprietary lock in.

Drivers SHOULD be provided by the makers of hardware. Unfortunately, this is not always the case, or the drivers are of poor quality. If anything, GNU / Linux developers deserve an immense amount of credit for the work they’ve done reverse engineering hardware and developing open source drivers. I’m writing this comment on a Macbook of all devices, and despite it not being particularly Linux friendly, everything works (mostly). In my particular case the open source nouveau driver works better than Nvidia’s proprietary driver in everyday non-gaming tasks (it lets me control my display’s backlight brightness, which is completely broken with the proprietary Nvidia driver, and searches reveal I’m not the only one with this issue).

You forgot the word: DESKTOP.

Servers have NOTHING to do with the desktop experience.

Dedoimedo

When you go and watch any sports event, which part do you concentrate on? The actual game itself, or the ground it’s being played on?

The OS is like the ground, and the games are like the software made to perform specific tasks.

If I have to spend most of my time concentrating on the ground instead of the game, then I wouldn’t even go and watch the game. It is pointless.

Have you ever watched any sports event where the ground needs to be repaired or fixed every time a game begins?

Baseball between innings.

I am an end user with average skills who has been working in the top-level government sector, and your point about “poor consistency in Linux distros” is perfectly true. In my experience, CentOS 6 is the only distro that works flawlessly, without a single problem. But after the Gnome 3 era began (I am still searching for the guy who designed and developed Gnome 3), problems started and pushed me to go back to Windows. Quality is totally missing in Linux distros, and lots of new problems have arisen. Developers should work with the end user in mind, like the Mint team is doing at the moment. Only quality brings success, not new designs, icons, themes, new functionality, blah blah, etc.

instal win10/vista10 and STFU!

Whenever I mentioned Windows, it should be construed as Win7, not Win10.

I’ve read all your articles but I don’t get it. I absolutely love Manjaro KDE. 🙂

You don’t get what? How’s your love for Manjaro related to the overall poor and deteriorating quality of distros?

Dedoimedo

Who cares if the *overall* quality is poor or deteriorating? All we need is that one distro that perfectly suits our needs, right? You seemed to really like Ubuntu 12.04 and CentOS 7 GNOME so why not just use those and be happy? Focus on the good and forget about the bad stuff? I test new or “updated” distros now and then but if they suck, I forget about them just as easily.

> Focus on the good and forget about the bad stuff?

Because the bad stuff is breaking daily usage…

That’s not the point… also not to mention that Ubuntu 12.04 is 4 years old now and will be unsupported next year. The point is that for his hardware, Linux has been progressing worse when you would think it would be advancing its support. When you want to use the latest distro and you can’t because of a buggy desktop shell or driver regressions, it doesn’t really look very good for Linux when even Windows has better backward compatibility with hardware.

Windows Phone support in Dolphin was fixed around six months ago. It all comes down to distros sucking at providing recent packages.

I don’t care. I use the end product and it sucks. As simple as that.

Dedoimedo

That’s an interesting thinking from a foss user. I hope you will have more luck with stuff working next time.

I’m not a fan of regressions either.

Because the average user isn’t going to manually download and compile the updated version. They’re going to look to their package manager, which handles dependencies as well.

Let’s not jump into compiling territory just yet. There are backports for major distros.

@Dedoimedo:disqus – I’ve followed you and your articles for a long time now and always found them enjoyable reads; however, this article here sounds pretty much like, “I use the end products, some don’t work “out of the box” on my machine, therefore they suck”. Believe it or not, I write that with all respect, I just don’t agree at all with what you’re saying this time around.

It just seems odd that in one of your latest articles about CentOS (at dedoimedo [dot] com), you seem to go through quite a lot of terminal work just to get the thing to work the way you want it to, and almost without a single complaint, yet when Linux Mint 18, for example, doesn’t work just the way you want “out of the box” or during the live session, you say it sucks, it’s all doom and gloom, without a single workaround on your part.

Samba was removed from Mint 18. It was announced in the “What’s New for 18” post (as well as the 18 beta announcement posts) so there was plenty of heads up.

The codecs were also removed from the live session and installation routine but Mint provided 3 different, easy, simple (GUI) ways to install them. This was also announced in the same “What’s New…” post, etc.

Now one thing I didn’t agree with concerning the removal of samba is that Mint did not provide a simple, easy way for the user to install samba and all its dependencies, the way they did with the codecs. I even left a comment saying so on the announcement post for 18.

Realtek network hardware is notorious for not working with Linux distros, and the fault lies not with the Linux distro but rather with Realtek’s lousy Linux drivers. It’s not up to Linux distro developers to fix this, even though they’ve been known to try like hell.

Anyway, as I said; wrote with all respect. Just having conversation.

@kmb42vt:disqus

Shouldn’t a product be completely ready before it gets released? I believe hardware compatibility should be the first priority for any distro developers. If you cannot install an OS, or have trouble installing it, be it Windows or OS X or Linux, there is no point fixing or testing that piece of software. Hardware compatibility is the basic building block of the software ecosystem, just like without a skeleton a human body would collapse. It does not matter how strong the other parts of our body are.

I agree, ideally a product should be ready before it gets released however, when it comes to software of any type, especially operating systems, they rarely are. And I’ve tested and worked with more OSs than I can recall (yeah, I’m an old “tech-head”) from main frames to desktop OSs. That’s why there’s always things like “service packs”, “point releases” and simple updates that contain bug fixes, etc after every new release of an OS.

The point is that it is simply not possibly for desktop operating system developers to foresee every-single-problem that might occur for a new release. There’s hundreds of thousands of different hardware setups both old and new, millions of users of every level of experience imaginable. Hundred’s of different drivers for all sorts of hardware and peripherals, and the development of the majority of these drivers are simply out of the hands of the OS developers. Windows or Linux distributions, there are always going to be machines that experience problems.

As much as enjoy reading Dedoimedo’s articles and posts, this “one person, one (troublesome) machine” type of testing hardly indicates that desktop Linux sucks.

Again, it goes back to scientific method. The machine is irrelevant. It’s the change, the erratic change, on the machine that is the problem. I test more thoroughly than most, on more hardware types than most. Furthermore, as I’ve already written several times – Samba, smartphones, codecs have nothing to do with hardware. That’s just pure amateurism. And then, battery life, also hardware independent, because MX-15 and Windows 10 offer excellent results on the same hardware. And so forth.

The change. The lack of consistency. That is the problem. But people will keep deflecting with comments like laptop, Dedoimedo, etc. No. It’s the product that sucks. As simple as that. Horrible rushed releases that are a disgrace to the entire ecosystem.

But you need to understand this piece – how proper experiments are done:

A + B = R1

A + C = R2

Ergo, A is a zero operator.

Hardware is completely irrelevant here.

And I’m not asking for much. I’m asking for just the stuff that 7 billion people expect on their system when they turn it on – some stability, music, videos, ability to download photos off their phones, decent battery life. The basics. None of that is a given in 99% of distros. Moreover, whatever result you have today CHANGES tomorrow. That’s at the heart of this sad saga. The lack of consistency, the unpredictability, the half-arsed QA.

Dedoimedo

To be fair, most people working on desktop Linux are Amateurs.

You have used this same BS reasoning many times. And it’s still BS. Your samples of hardware and distros are too small and therefore your results are in no way accurate. And you would know this if you really knew jackshit about the “scientific method” or statistics.

All you are offering is an opinion based on a small sampling. Period.

I have highlighted only a selection of examples. It does have more than 20 measurement points, so we’re okay with the whole 95% confidence thingie. Moreover, most of the problems are not related to hardware, but to inconsistency in software quality and results between one release and another as well as among different distros. Now, since we’re talking about science and statistics, how about you reveal your credentials?

Dedoimedo

Windows does not provide drivers. NO, IT DOESN’T. Anyone who says/believes that it does just doesn’t know what they are talking about.

Drivers are provided to Microsoft by the hardware vendor. Ask Microsoft for a driver, they send you to the vendor. Install a new video card? You need to go to nVidia or AMD. Sound card? Network card? Same thing. Or they come on a cd from the vendor with the product. FFS people.

So how is a lack of drivers the fault of Linux?

Saying it in plain English, so that everyone, including the folks with reading comprehension problems, gets it:

Nobody gives a fucking shit if it is Realtek’s fault, nobody gives a fucking shit if it is a lousy driver. But everyone notices: networking in Linux doesn’t work with a Realtek card. But in Windows, it works.

So who is to blame here? No, not Realtek. No, not Windows/ Microsoft. The only one is: Linux.

By the way: like Dedoimedo, I also notice a massive drop in quality in Linux since 2010. Nearly everything goes down the drain. The only thing alive and prospering is finger-pointing. It’s always the others’ fault. All the evil companies around us are fucking the only good one in town: Linux. Bahaha.

The only good one, Linux and all the related crap, is not to be criticised. The only one who is always wrong is the user, who is expecting too much, like connecting his smartphone to his computer… but the evil companies, like Realtek and such, are to blame when it doesn’t work, right? It cannot be the fault of lousy quality assurance (is it even done in Linux and the distros?). The reason cannot be the horrible development pace. And for sure, the reason does not lie within an incapable, stupid crowd of “developers”. Right?

Having used Linux for nearly 10 years now, I know what I am talking about. I am no fucking newbie who is masturbating all over his Manjaro. I see the daily shit in Linux and it is becoming horrible. My prediction is: by 2020 we won’t have any usable distro out there. Beware of Wayland. This will be the last nail to be put in the coffin.

My impression is: the developers are either incapable, are just students who want to tinker around, or they masturbate about the users who are desperately trying to get their boxes into operation after something broke again. Maybe a new fetish?

The truth is: you run a project = you are responsible. Responsible for the outcome, responsible for the users. Even if it is free. It takes time to understand that again. In the years before 2010, most of the projects had an understanding of their responsibility. But with the arrival of a lot of new people, this understanding went away. Let’s wait until the ecosystem has cleaned itself again of all the nasty shit.

JUST STFU and buy a laptop manufactured this century u bump…

You need to improve your ability to read. The laptop is from 2015.

Dedoimedo

I’m reading the reddit comments. The level of reading incomprehension is unbelievable. People don’t even bother reading the text – the main comment is ‘running from live CD’. No, wrong. Everything is installed and tested thoroughly. Battery life deterioration. No, wrong. Windows 10 and MX-15 serve as a good baseline for that. It’s so elementary. The scientific method. How you measure and compare things. But yeah, pointless. That’s why Linux desktop sucks.

Dedoimedo

Always blaming the user; I feel like the community of Linux is part of the problem

In all reality there is no “Linux community” when it comes to Linux based desktop distributions. There never was and unless things change drastically, there never will be. All that exists is the community that surrounds each particular distribution and that’s pretty much it. And that comes from a Linux distro user of many years (Linux Mint currently).

It may be, despite all logic, that the scientific method simply does not apply to “Linux desktop”, especially when it comes to one person, one machine type testing. There’s simply too much of the human factor (and passion) involved.

It applies to everything.

Dedoimedo

So using your logic I have come to an opinion (which is what you are offering) …

That’s why you suck.

Dedoimedo, Could you please, please install and try Slackware and see how it works? That would be really interesting because Slackware is known for its stability and consistency and they don’t update their distribution every six months. Please do this and let us know.

FWIW, I think Dedo’s getting a bit of a bad rap here – sure, many distros don’t perform well on his HW setup, but I think all it really means is that:

1.) If you want to test and use a wider range of Linux distros, avoid his setup (or at least, avoid Realtek wireless network cards), and

b.) If you have a setup much like his, follow his guidance to the limited number of distros/desktops that he’s had success with.

Since my HW doesn’t match his, I can (pretty much) ignore his advice, but admire the way he writes, and his strict methods for testing. I’ve even had occasion to use the tips and tricks he’s offered on some subjects.

@Humphrey Chimpden Earwicker–

The only reason Dedo’s getting a bad rap is because most of the room-temperature-IQ commenters can’t rede gud, can’t thimk gud, and CAN’T REASON AT ALL!

All you mouth-breathers, go read–if you can figger out how to find it–“Reading comprehension is a big problem in open-source”, February 24, 2016, Dedoimedo. IT APPLIES TO YOU!

@Dedoimedo–Duh, like go get a new laptop, man. Like. We can’t go believing someone who runs what those pesky scientific types call ‘controlled experiments’. Like.

The laptop is from 2015. Not everyone can afford to upgrade on a bi-annual basis.

I sincerely hope that your reply is aimed at those same rocket scientists my comment is.

By the by, it really doesn’t matter–the only salient point is that ‘Dedo’ uses the SAME HARDWARE so that RESULTS CAN BE COMPARED.

What is so hard about grasping this point, folks? This is what is known in learned circles (ooops! I think I’ve stumbled on the problem…) as a ‘controlled experiment’.

And, no, millennials, a controlled experiment is NOT a communist plot…

Ah, yes. I was confused as to what was a quote and what wasn’t in your original comment.

Disqus really needs a quoting mechanism.

You can use

” … uses the SAME HARDWARE so that RESULTS CAN BE COMPARED … ”

So do I. And my results are different than his. So your point would be, what?

This might be old news – the complaint regarding non-free elements has been addressed by taking them out of the ISOs, but leaving them available for download from the repositories.

I can’t speak for the other issues raised in the article, but I know the download breach and other issues have at least caught the attention of Clem and crew…

It’s more the second and third points from that article. They’re throwing stuff in Mint to make it a fuller-fledged install without doing the work to ensure that it’s actually stable when done.

Basically, they’re so preoccupied with whether or not they could that they didn’t stop to think if they should.

Slow down – Linux? No. More speed-up, please. It will upset computer reviewers and system administrators. But rapid evolution can please the end users. It reminds me of my job replacing mini-computers with micro-computers (1980), running dBase and SuperCalc. My users wanted it, but head offices and the members of the Australian Computer Society tried to deny the users their needs.

Distrowatch etc. do not like my opinions, because I am too user orientated. The old experts do not want to recognize that GUI design has replaced RTFM, “experts”, forums, help files, hardcopy, and professional qualifications. This week, Distrowatch’s Jesse Smith clearly explained why he has differing opinions to Dedo. Similarly, I also have differing opinions to both “experts”.

As Google and the internet expand, the “experts” can be proved to be inexpert, in hindsight. That is also scientifically to be expected. Computers are very rapidly improving and changing in all aspects of hardware and software.

Hardware changes are now forcing Seagate to sack many staff members from spinning disks, and Samsung to expand its multi-terabyte SSD production. Faster and cheaper hardware of every kind arrives so quickly that Linux kernels are struggling to catch up: USB-2, USB-3, etc.

User expectations have also changed: touch-screens, voice-commands, facial monitoring, etc.

More rapid changes. More changes, please.

When you used the quotation marks there for “experts”, what did you have in mind? Are you trying to hint at something?

Dedoimedo

The point is, there is no consistency. Here, quite a few say to use this or that distro and everything will be fine. That’s not a solution. Yeah, maybe Manjaro worked flawlessly for you and Slackware for you over there as well. Good for you. But I could turn the same argument around and say that these distros didn’t work out for me. Or that I had big problems with “everybody’s darling”, Linux Mint (that is the truth). I have a 2015 HP laptop, a 2011 Dell laptop and a brand-new 2016 Lenovo desktop. I don’t even bother with Linux anymore. The point is, if I install (grudgingly) Windows 7 or 10 on a computer, any computer, I know for a fact that it will work. The sad fact is that this is not the case with Linux. And, please, don’t even get me started on Linux as a “Windows alternative” for friends, relatives, etc. Really? There was a chance around 2013-2014. Now, not anymore, unless you want to be on their speed dial list for 24/7 tech support.

It’s my opinion that most people should use ChromeOS or CloudReady as an alternative to Windows, not Linux. Linux was never based on the same principles as Windows as it was originally for researchers and enterprise, so to do many of the same things as Windows (like shutting down with a button click), it requires lots of ugly hacks.

Lol. To shut down my Linux systems, all I have to do is click on an icon and then click on “shut down”.

And by the time I can reach the power button on my monitor the computer is already off.

For most people, using Linux means installing it on “bare metal”. When was the last time you installed any Windows on bare metal? I’m willing to bet not everything “worked out of the box”. Last time I tried, I had to download approx. 1GB of drivers to get most of it to work.

Even networking didn’t work. Not just the wireless; it needed Ethernet drivers too. Luckily for me, I had a Linux computer handy to download everything.

Updating to Windows 10? Or even updating Windows 10? Shoot me. Just shoot me please!!!!!

So comparing a Linux install to a Windows install, I’ll take the Linux install thank you.

I installed Windows 10 on an older Asus M4A78LT-M mobo with an AMD Phenom II processor and 8 gigs of cheap RAM. All fixed drives are “spinning rust”: old, non-SSD. The system has run every version of FreeBSD, 32 and 64 bit. It ran OS X up to Mountain Lion, when Apple decided to exclude AMD entirely. It has run every iteration of Windows from XP to 10. The system is old and abused. It runs every Linux and FreeBSD I throw at it. Windows 10 performs poorly on this machine (bare metal, of course) and doesn’t seem to run much better on any consumer-grade machines I have seen. Linux has its quirks, some due to vendors not providing hardware drivers, and some shortfalls are present because Linux just sucks at some things. I am writing from MX-17 Linux 64, mostly out of the box. I am a long-time Slackware user, and I’m migrating over to a Debian-based system. I cannot stand Linux Mint or Ubuntu. I am moving to systemd because too much relies on it today, and the shim doesn’t always work properly. I “stumbled” upon MX Linux while searching for a distro that would run properly on an old Dell 1521 laptop with a low-end processor, 1 gig of RAM and Broadcom wireless. The system ran antiX 32 bit admirably, but MX Linux was a tad too heavy. I liked many features of MX Linux and am using it right now on a Phenom II desktop. In summary: I do not care what OS others run, I use what I like. I’m glad modern “nix’s” run on my antique hardware, and that I can keep it updated with security patches.

Very good article. I also think that they have to slow down. I like Plasma 5.7 and GNOME 3.22, yes, but… As you said, there are still a lot of problems that haven’t been fixed. I have an Nvidia graphics card and I still have screen tearing with KDE, Xfce and LXDE. I know, it’s possible to fix that, but by default the problem is still there and the developers have done nothing to fix it. KDE still crashes randomly. Dragging windows in GNOME is like dragging butter; the window doesn’t follow the mouse properly. When I posted this in a Linux community, they commented “the problem is related to the Nvidia drivers, not to Linux.” Well, but… Ubuntu 16.04 has no issue with the graphics card (but it still has issues with the new software center and… many more…).

I hate Windows 10, but I know that if I install that, then it will just work.

I think that the most stable distros now are Debian and elementary. They are released only when they are ready. Then everything just works fine (maybe…).

Sorry for my bad English. I hope you’ll have a good day, bye!

>If things continue the way there are, Linux will just become irrelevant. No one needs a Microsoft-alternative operating system if it can’t do half the things that a typical Windows system can. We’re not talking about miracles. Basic expectations. Connectivity. Consistency.

The fact is, Linux probably won’t beat Windows in market share, but that’s okay. Linux will live. Did your laptop come with a Linux sticker? No? Then you can’t expect your hardware to just work well with it. You bought a Windows laptop. It’s only guaranteed to work well with Windows. That being said, my Dell Inspiron 1545 works fine with Linux and has similar specs (T9600, 8GB of memory, Broadcom wifi, etc.).

You’re probably looking for ChromeOS or CloudReady. In fact, the reason I bought a Chromebook is because they run a Linux kernel, which means the hardware pretty much is guaranteed to be supported under Linux, and with Intel Chromebooks, it’s not likely they wrote their own driver just for the hardware.

“Did your laptop come with a Linux sticker? No? Then you can’t expect your hardware to just work well with it.”

I hope the developers of Linux distributions have more ambition than you do.

There is only one area in which Linux has not gained a massive chunk of market share, and that is the desktop. If you look at servers or supercomputers, Linux has a monopoly over its competition. For instance, Google, the Large Hadron Collider and the International Space Station run Linux.

TL;DR — I have bad experiences with Linux, therefore it’s dying.

Cool story, bro.

The author talks about a number of regressions on top of lingering bugs in Linux, based on his experience reviewing a large number of distros. You didn’t even bother to read the entire article, but you felt privileged to post a comment.

The timing of this article couldn’t have been better. I just finished getting my hands dirty trying to figure out why my LUKS-encrypted filesystems always get corrupted in Debian 8.5 after a few mounts. These encrypted filesystems always work fine in Debian 7.x (Wheezy) and 6.x (I’ve tested countless times). I migrated to Debian in 2010 mainly to avoid regressions and inconsistencies. Alas, it’s no different, apparently.

Though I use Linux exclusively, I understand the author’s criticism. The problem with desktop Linux is the lack of unity, poor attention to average users and too rapid changes. GNU/Linux and some other FOSS software are mostly developed and maintained by mad geeky/nerdy people who love to have sex with command line crap, patch kernels, compile from sources and distribute only the sources. If you ask them for some help, they will reply with something like “Go and read the man page”. WTF?

Also, FOSS software is often extremely fragmented. If you imagine a Linux distro as a car, the parts of that car would be produced by separate teams which don’t cooperate with each other, only scratching their own little itches. And then the wheel producer decides to change the wheel diameter, the other one the cylinder size… without giving a ####. That’s why most distros feel half-assed and beta, while your “buggy” Windows almost always works out of the box.

The only high quality and popular “Linux distribution” is Android, which is solely maintained by Google Corporation, not by a bunch of mad geeks. Unfortunately I’m not a fan of touch-screens, the productivity (especially typing) sucks…

I’m now using Linux Mint 18, which works pretty well on my PC. But will I recommend Linux to others to give a try?

For people who like to experiment with new stuff – YES.

For people who value their privacy, ability to control their PC and freedom – YES.

For others – UNFORTUNATELY NO.

RHEL and SLES are also high-quality, but they go onto expensive servers, and companies pay a lot of money to run them properly supported. In the desktop game, you’re right. Almost nothing. Absurdly, a little bit of CentOS, and Trusty peaked as an Ubuntu release.

Dedoimedo

Fedora is basically RHEL. CentOS is RHEL. openSUSE is also basically SLES. Yes, they are community distros. But openSUSE goes through the same build process and uses the same build tools as SLES. Red Hat also helps to support Fedora. And all are very good on the desktop.

One more thing, to help all those who struggle with the battery life report. The installed Windows 10 still offers about 5 hours, and MX-15 offers 4.5 hours. It is not the battery that is deteriorating, it is the new crop of lousy distros, combined with a growing inability by an alarming number of people to actually read articles in detail. Hence, not my fail. Now reddit it good.

Dedoimedo

I know you know this, but we all know that it seems as if you didn’t. I’m talking about the know-how. People are extremely defensive, especially when they don’t have a very strong point in a discussion. I don’t think it is specific to Linux, but applies to people in general. If you write a text pointing out the weaknesses and problems in such a flamewar-prone topic as “why Linux sucks”, you should make sure any and all assertions are shielded by properly addressing at least the most probable and lazy counter-arguments.

Note I said “you should” and not “you have to”. Alas, other people “should” be able to fully comprehend an objective criticism without having to resort to stupid excuses in order to avoid having their feelings hurt.

The battery is just one example: a whole class of replies could have been avoided by just a “not like in Windows, which as a reference achieves a whopping 4.5 hours”. Same with the point of consistency. This is a GREAT point, and not so many people focus on this. It is much better to fail consistently than to succeed inconsistently. This should be repeated more often, but people who just read your criticism in this post, and didn’t follow your style and precedents, won’t understand much more than a simple “hey this sucks” instead of the proper “the hardware didn’t change and still the software is working worse than earlier”. It’s _this_ that sucks. I would love it if more people were talking in a serious and constructive way about how the software is consistently working worse on each iteration, regardless of the hardware, which has always been the same in your tests.

To address the consistency with a more user-friendly approach, the distribution “Stella”, based on CentOS 6.7, is quite nice. Too bad they haven’t upgraded to a 7.x base yet. But it seems to me a nice project.

I agree with Dedo here 100 percent.

My hardware is not the same as his, and my experiences aren’t always the same as he outlines in his reviews.

But, I do see the issues he’s collected here: Something works, then it does not work. That’s Amateur Hour. That should not happen.

It’s naive to expect people to inconvenience themselves in any way simply to support Linux ideologues. Only ideologues do things like that.

It’s juvenile, cowardly, and insulting to assault anyone who writes objectively about the Linux desktop instead of engaging in hive mind cheer leading.

Linux needs to fix its own problems. And that includes ending the lazy blaming of proprietary vendors for bad driver support.

I think that most of the hardware-related problems (Wi-Fi, battery life, compatibility, etc.) exist because there is no single mainstream hardware maker like there is for Windows and for OS X. Objectively speaking, Apple could not rely on made-for-Windows hardware to run its FreeBSD-based OS, so they built their own hardware. Likewise, Linux should have made-for-Linux hardware.

Dell and System 76.

Lenovo as well makes its computers fully compatible with Linux.

The problem with Lenovo and Dell is that they do not make it easy to buy any computer pre-installed with Linux.

The problem with System76 is that they are not mainstream enough.

A simple search of dell.com for the term “linux” leads to …

http://pilot.search.dell.com/linux%20laptop

Which contains many laptops along with “customize & buy” and “add to cart” links. How much easier does it need to be?

The same search term at Lenovo also gets you there.

And no, System 76 is no Dell or HP, but Linux is all that they sell as an OS. And they are the largest Linux ONLY hardware supplier that I found. No, they are not in places like Best Buy, but then I haven’t seen Linux there either.

You have to look for it. Simply look for both and you will find a great deal of information.

Oh and in the Linux world, System 76 IS “mainstream”.

Thanks, I knew already.

Dell only makes devices that are too expensive to be commonly bought.

When I say System76 is not mainstream, I mean System76 does not yet have a market share like the big hardware makers (Apple, Lenovo, HP, Dell, etc.) have.

Don’t get me wrong, I use Linux, and if it were up to me I would obliterate anything Windows related from history just for the grief it gives to PC admins.

Well said. I waste at least half an hour a day disconnecting/reconnecting the wifi. Not to mention the frequent reboots, since network manager stops working after a few enable/disable clicks. Compared to the wifi problems, one to three crashes a month seems like nothing at all. And applications work, but the majority are not so great or are available on other platforms. I don’t really know why I am using it at all.

I have found the issues are generally associated with old software. You need a system like Arch, which has fast updates. I have never had an issue with software on my Arch system. After seeing where Ubuntu is going, I decided to use Manjaro as my beginner-friendly distro of choice.

Well, you still need to buy your hardware with Linux in mind. This hasn’t changed since the 90’s, but it got easier. Check out Ubuntu’s compatibility list. For me, I have zero problems with Linux on the desktop.

It’s not very easy for an average user, and most of the hardware manufacturers usually don’t play the game.

Um, you can buy some really good hardware with Linux installed. Laptops, desktops, workstations and servers. Dell and System 76 are a couple that do. They come with Ubuntu installed. But just about any mainstream distro should/will work without any problem.

I see my experiences reflected in this article. I use three laptops: two ThinkPads and one Toshiba laptop. Networking was perfect with all three of them in Ubuntu 14.04. In 16.04, wireless sometimes loses connectivity in between, regularly after suspend. All laptops are hit by the regression, but in different ways. Xubuntu 16.04 showcases graphical problems with all laptops, none of which are to be seen in 14.04, 14.10… CentOS 7 works flawlessly on all of them. Linux Mint 18 cannot regulate the backlight of the Toshiba laptop, Fedora 24 can. And so it goes on; those were just a few examples. I find the conclusion that the quality is deteriorating to be true.

I have just installed and tested Linux Mint 18 Cinnamon on 3 different machines: an Acer 772G, an Asus 752vx and a Lenovo Yoga 2 Pro with HiDPI and touchpad display. It just worked well! I can play all multimedia, connect easily to all my shares and have had no network troubles whatsoever from the beginning. No backlight problems, no suspended network connections. The only difference to your experience may be that it loaded tons of updates and some proprietary drivers after installation. As is typical with new software (like with games), the problems and bugs will be fixed over time or by growing expert know-how in forums.

I couldn’t agree more! We need a damn stable, solid and consistent base to actually work!

We would also need a damn stable, solid and consistent Windows PC without viruses for years to actually work? Where is it?

What you need from MS is your problem and I couldn’t care less.

The discussion here is: Linux makers, or at least some of them, as we have gazillions of projects, must change their design paradigm and focus on delivering something more polished rather than the newest and coolest bells and whistles.

Once we had something like this with openSUSE, but they got bitten by this “new is always better” disease. openSUSE Leap is, nowadays, pure crap. Maybe further releases will put it on track again.

Yes, I do pay, in the form of donations. Every year. If someone starts a GNU/Linux distribution that delivers stability and consistency, I would happily purchase it.

Well, if you are willing to pay for Linux stability on a regular basis, why don’t you use an enterprise desktop release? openSUSE never seemed to be focused on stability, but SLES and RHEL are. If you insist on donationware, most users are also completely happy with Slackware regarding consistency of the “base”. Being stable, solid and consistent across a vast field of hardware/BIOS combinations requires either a radical approach and a fanatic community, or a ridiculous amount of workforce, like the uncountable number of MS full-time employees and Windows-focused vendor hardware/BIOS engineers. What people like Dedo don’t (want to?) understand is that maintaining backward compatibility is extremely difficult and expensive to achieve, and it often contradicts featuritis.

Simply use RHEL.. it’s f*cking stable, solid and consistent.. Nothing breaks when you update and when you have any problems, there is 24/7 support that will solve it for you.

It’s 300$/year and you are problem-free.. You people want quality and reliability but are not willing to pay for it. If you get your Linux distro for free, be grateful that someone did it for you out of goodness of his heart and stop bitching about how no one solves your problems.. You gotta pay first – developers need to eat as well you know.. 😉

As you didn’t spend your time to read the follow-ups, I will not spend mine to reply.

What do you mean by “follow-ups”?

I have just installed and tested Linux Mint 18 Cinnamon on 3 different machines: an Acer 772G, an Asus 752vx with Nvidia graphics 950, and a Lenovo Yoga 2 Pro with HiDPI and touchpad display. Tell you what, it just worked well! Wtf, no hell in 2017?! I can play all multimedia, connect easily to all my shares and have had no network troubles whatsoever from the beginning. My other devices connect easily via web services or some retro USB plugging and heck, I can still do anything I would want on W10.

The only tweak I used was to install with the nomodeset option and install the Nvidia proprietary drivers and some Intel microcode package afterwards via graphical interfaces. So, no hell for me, just heaven!

I am now scratching my head thinking about what is more probable. Either I am an extremely lucky guy and it’s an absolute coincidence that everything worked out of the box, which is close to impossible, OR

Something is wrong with the above article using terms like hell, frustration, public enemy, etc…

Some suggestions to get a more “scientific” reasoning about what’s wrong here:

1. Igor, did you test your current Ubuntu (>=16.04) derivatives on any other machine besides your dreaded G50? What were your results?

2. Let's compare the tens of thousands of MS employees, plus uncountable numbers of hardware vendor engineers, working to get W10 (the OS for which you must pay $XXX per licence from August on) running on all the hardware/BIOSes out there against the fewer than 100 Linux employees working for Canonical, Mint, etc. (who are still working on an OS without a price tag).

Isn't it wonderful that, despite everything commercially blowing against Linux in new hardware, it runs so well on most PCs and on embedded or mobile hardware?

3. And now we get these "LINUX has to work nearly flawlessly on unfriendly hardware/BIOS combinations from the beginning on …" hubris sort of articles. Igor, this is no scientific approach: there is no math to predict error rates or results on compatibility issues. Just blaming Linux for not running well on unfriendly hardware or buggy BIOS versions, and using this "regressions, regressions everywhere" semantic, is closer to FUD than to serious reasoning about reality.

The one simple conclusion here is: Linux on the desktop is still not for everyone, and you still need to get some insider know-how BEFORE installing blindly on unfriendly hardware. But even if you stupidly expect it to run on any ugly hardware out there, you still have a good chance of getting it running against all odds, which is amazing.

Conclusion:

In my experience, this article is nothing more than one guy’s impression, which also changes quite often (roughly twice a year). In my view, nothing has changed much in the last years: Linux on the PC is still not for every user, and MS can still hope to gain more than 1-2% market share against Linux/BSD derivatives on the mobile market without ruining NOKIA or paying for Linux-FUD (-:

I have been using Linux for 14 years, first Mandrake, then Xandros, and now Mint. All worked great, certainly better than any version of Windows I have ever seen. I also tried a few other distros (I currently have a dozen or more in VirtualBox) along the way, and all were fine. Several of my family members have converted to Linux, and a bunch of my friends have converted to Linux. All find Linux to be a great operating system. My church uses Linux, and it works just fine. I really don’t know what the author’s problem is.

The author’s problem is that at any unexpected point in time, whenever your family members and/or church users apply an automated or manual update to their systems, the systems may (or may not) stop working as well as you have been expecting, in some random component, be it hardware compatibility or software behavior.

The author’s example of shared folders shows this pretty well: some of your family members may have been using some shared folders configuration, which after an update gets broken for no good reason. You cannot update confidently knowing that everything that worked before will keep working afterwards. And that is the problem.

Of course the shared folders stuff was just one example of breakage which hit the author. Maybe none of your family members use this, not even you. Well, in that case you can expect this to happen in any other component, or maybe you get lucky and the lack of QA and bad policies that most distributions follow never get to hit you, in which case you would be lucky.

I myself am a very happy user of Mint 17.3 (Ubuntu 14.04 under the hood), it works reliably and very nicely as it is just now in my system, but I know that whenever I want to update to a newer Ubuntu, things will break… or not, which would mean that I got just as lucky as I can get, but in any case it wouldn’t be thanks to the good QA done by the distribution producer.

Do you think this is true if you keep to LTS releases, and only update from one to the next? I find all Ubuntu LTS releases have been solid. I think part of the culprit is that Linux users are too tempted to update, when Windows actually updates rather slowly.

How is that any different from Microshit OS?! Maybe you think that “somewhat working”, which is most of the things on MOS, is better than breaking in a nightly update and being fixed in the next? Every update could cause some damage, irrespective of the OS you’re using. Software engineers are not immune to errors, and neither are we. We’re all human.

If Linux is dying, why is MOS on its way to becoming the new Linux distro?

And we’re talking about comparison between shareware OS and freeware OS.

Why don’t you compare it to RHEL, or SUSE enterprise?

The author is effectively complaining about a change made by the Samba team to improve security by insisting on credentials for shares. In this way the Samba team are improving what has always been a very suspect and insecure Windows technology. If he is not interested in security, he can always continue using Windows.

Ignorance and laziness are the problems that most computer users have. They’ll open anything. They’ll go to any website. They won’t do even the minimum to keep their computers or themselves safe. They outright refuse to back anything up. Or even keep their antivirus/malware software up to date.

It’s that simple.

Bruce Neale

Unfortunately I must agree 100% with Dedoimedo’s findings.

I have been using Linux off & on since Xandros 3.0, which was easy to install alongside Windows & probably much easier to use. The Xandros book covered most issues & we used it happily for a few years, until necessary security updates compromised things.

I used Windows XP for a long period because the Software I needed was readily available for it & it saved me time.

Today most of this Software would be available as free software & be better quality.

I have always installed Linux Distros in multi-boot with 3 or 4 together.

Previously I would expect to try 5 or 6 Linux Distros to get 3 I was happy to keep for usage.

I have mostly used a version of Mint from 10 onwards as my main Linux distro in multi boot with Windows.

The original IBM T41 ThinkPad works with LMDE-2 + AntiX MX-14.

The ASUS Pundit PH1 has Linux Mint 17.3 now + Q40S + AntiX MX-14. These have all survived the various upgrades until today.

This PC is rather old, but had a Hard-Drive death long before it should, so it was unused for some years.

I built up a second PC from New parts I had been collecting & used Windows XP mostly until it was well past its end of life.

Late last year I decided to not go past Windows 7, so I researched what was available from the recent Linux options.

I have more than 30 each of 32-bit + 64-bit DVDs of Distros since 2015.

I have not used Virtual Box for a long time, so every install attempt is direct to a hard drive.

All these distros have now been installed with 2 partitions each (1 for ROOT + 1 for HOME), plus some space at the start of /dev/sda for BOOT + swap.

I have tried 5 or 6 times to get a 64-bit PC for Linux only. NONE have worked for any length of time. This is one example:

# I have an ASUS P8Z77-V-LX2 motherboard, which had very old Bios.

The Motherboard appeared very good, but I tried quite a few Distros with the original Bios, but nothing wanted to work, so I downloaded & installed the latest Bios & reset the defaults.

The Network Stack is ‘Disabled’ & Secure Boot is set to ‘Other OS’.

I have tried about 20 recent different 64-bit Linux Distros from DVDs.

Various Mint 17 & LMDE-2, Ubuntu 14.04.3, Peppermint 7, Lite 3.0, Zorin 10, Q40S, Elementary, etc, etc, etc.

Most do not make any attempt to install, and any that do either fail at the Keyboard, lose the Mouse (both cable & wireless), or do not recognise any Mouse. I have German Keyboards. I try to use the pre ‘sysD’ Linux versions, if I can.

Neither of my 2 GParted CDs would work at all.

Normally either LMDE-2 or Mint 17 series will install on any of my old 32-bit computers, so I prefer to use whichever works.

These load to the point of asking for a password, but don’t recognise a Logitech keyboard. (K270, K520)

All of the listed Distros have been tried multiple times over Days or Evenings.

The only DVD that would Install correctly was Vector 7.1 STD Final 64-bit.

This allowed full use of their GParted to Rework the 1000 GB WD hard drive for Multi Boot. (Root + Home Partitions for Every Distro)

The computer boots normally & loads the Distro correctly.

At some point this also locks up with Mouse usage.

As this is a non ‘sysD’ Distro, I downloaded & Installed both Devuan-Jessie-1.0-beta-amd64 + AntiX MX-15-July-x64.

These both Install, but at some point lose the Mouse (Wired or Wireless Logitechs). These Keyboards & Mouse work on the 32-bit PC like this old ASUS with Mint 17.3.

I have both Windows XP & Windows 7 DVDs, but I would rather not go down that road.

# I have an AMD AM3 Motherboard – Gigabyte GA-MA770T-UD3

This had Linux Lite 3.0 (which I quite like), AntiX MX15.1 & Q40S, all running fine for about 10 days, until it had a Hissy fit just like Windows 7 had a few months earlier. My wife’s Windows 7 had the same problem, but hers is still fine today.

# The only Distro that has been installable on all 3 of the old 32-bit PCs is the AntiX MX14 + 15 series. (ASUS Eee PC 1000H)

Any Distros that wipe out or take over the Boot loader don’t get used, unless I am desperate!

# My problems should be very simple to rectify. Now is the time to start my Slackware journey.

Slackware 14.2 appears to be installable & LILO has worked fine in the past with Salix & Vector.

The Morals of the story are:

1. Dedoimedo is 100% correct with his information.

2. Older good quality hardware sold in large numbers will be supported by someone.

3. Linux Distros that were very good to excellent, cannot be installed in 2016.

4. Linux problems should be easy to resolve. They are exactly the same we all have, we just have to make a small change to the way we think.

best wishes

Bruce Neale

It all started with the new desktop wars, for lack of another phrase, the move to GNOME Shell and KDE4 and the resulting further fragmentation into MATE etc. Then PA and systemd. The constant moving target thing and resulting instability is enough to put you off if you’ve known better. New alternatives come along, and some old, sane ones remain. Dare I say Slack/BSD?

The day Linux dies (which it won’t, it has too big a grasp on the market) will cause a lot of chaos in the world of technology.

There will always be a percentage of people who try Linux and fail, they don’t have the ambition to learn how to manage a Linux system properly without 24/7 telephone support. Those of us who take pleasure in learning how a computer system works end up with a reliable system. There is a reason why 498 of the world’s top 500 supercomputers run Linux.

Those who don’t want to learn or can’t learn can return to Windows and enjoy the BSOD and system freezes and viruses which calls to the 24/7 support fail to resolve without a reinstall and loss of data, it’s up to them.

“There will always be a percentage of people who try Linux and fail, they don’t have the ambition to learn how to manage a Linux system properly without 24/7 telephone support.”

Typical Linux zealot.

“Those of us who take pleasure in learning how a computer system works end up with a reliable system.”

Typical Linux zealot who wants Linux to stay the nerd, hobby, tinkerer’s OS.

“There is a reason why 498 of the world’s top 500 supercomputers run Linux.”

Yes, and it’s not because it’s good. It’s because it’s free and can be tinkered with.

“Those who don’t want to learn or can’t learn can return to Windows and enjoy the BSOD and system freezes and viruses which calls to the 24/7 support fail to resolve without a reinstall and loss of data, it’s up to them.”

Typical Linux zealot who can’t admit that BSODs are relatively a thing of the past, can’t admit that Linux could ever freeze up, can’t admit that Linux has viruses, can’t admit that tech support for Windows could ever work, can’t admit that Windows problems can be resolved without reinstalling, can’t admit that Windows problems can be resolved without loss of data, and who can’t admit that Windows just works.

“… “There is a reason why 498 of the world’s top 500 supercomputers run Linux.”

Yes, and it’s not because it’s good. It’s because it’s free and can be tinkered with. …

Lol. It’s not good? And yet they run clusters that cost tens of millions of dollars on it? Wow! What ignorance and arrogance.

They use it because, unlike Windows, it can scale across hundreds of thousands of processors. They use it because it can be modified, unlike Windows. They use it because with it they can spend the money that Microsoft would charge on more hardware instead.

They use it because unlike Windows it works. Because it is good.

As to your “typical Linux zealot” comments in your last paragraph: I can and do admit to all those things. Yes I do. But you can’t admit that Linux is better than Windows for many, many things.

But you cannot admit that for things other than the desktop, Linux is by far the better choice. You cannot accept that fact, and it is fact,