I love Linux. Which is why, whenever there’s a new distro release and it’s less than optimal (read, horrible), a unicorn dies somewhere. And since unicorns are pretty much mythical, it tells you how bad the situation is. On a more serious note, I’ve started my autumn crop of distro testing, and the results are rather discouraging. Worse than just bad results, we get inconsistent results. This is possibly even worse than having a product that works badly. The wild emotional seesaw of love-hate, hope-despair plays havoc with users and their loyalty.

Looking back to similar tests in previous years, it’s as if nothing has changed. We’re spinning. Literally. Distro releases happen in a sort of intellectual vacuum, isolated from one another, with little to no cross-cooperation or cohesion. This got me thinking. Are there any mechanisms that could help strengthen partnership among different distro teams, so that our desktops look and behave with more quality and consistency?

Where are we now?

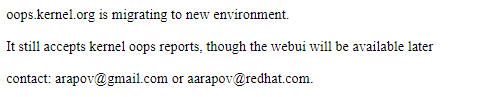

Remember kerneloops.org? I first mentioned this site in my Linux Kernel Crash Book back in 2011. It’s a sort of central repository that lists kernel crashes across multiple distro editions and kernel versions, acting as a unified database for problems related to the core of the operating system. To the best of my knowledge, it is the only such resource in the wider Linux world that crosses the boundaries of particular releases. There’s nothing else truly “cross-platform” in this regard.

And it’s getting worse.

The Linux desktop seems to be regressing. The latest Ubuntu is an example of time travel back to times before the enthusiastic attempt by Canonical to make the Linux desktop big. In the past, distributions had various mechanisms in place to report kernel crashes (application crash tools are still there), and upon completed installations, some of these distributions would also send a hardware report. It wasn’t perfect, but it was something. Unfortunately, we see less and less of this every day. Even kerneloops is on the blink at the moment. For a while now, the site has been undergoing maintenance, and the UI portion is not available for use. Not a good sign.

Here’s my proposal

Ranting may help keep the spirits up, but it won’t fix things. Hence, I have a few practical suggestions that may give us solid, stable desktops that do not regress in between releases. This is not a call for action, a political speech, or anything of that sort. Just an honest attempt to see more quality and consistency in the Linux desktop.

Wholly owned stack

Blameshifting is one of the most common evasion tactics in the corporate world, and it also affects Linux desktop distributions and associated software. The basic concept is: when faced with a possibility that some software code you own may be at fault (there’s a bug that needs investigating and fixing), try to blame someone else for the failure.

The best example from day-to-day life is when you call your ISP to complain about network speed. Even if you’ve done your due diligence and tested everything, they will still tell you to reboot your computer or replace your router or something. True, for a large number of people, this may be the case, but the attitude is prevalent, and then, when there are genuine issues on the vendor side, they get ignored, sidetracked or lobbed over the fence.

Linux distributions are not wholly owned stacks – they are a combination of some unique parts and lots of components taken from other places (let’s call it upstream). Ideally, the distribution team would own everything and be accountable for what happens from the app to the kernel and back. Alas, this is not the case, and so, if you do report a problem, it will often be relegated to the “owner” of the tool, which could be a library developer halfway across the world, or a part-time student volunteer with no desire to meddle or change their code. If you’re lucky, you’ll land on a component that belongs to a professional institution, including the likes of Novell, Red Hat and Canonical, in which case you can expect a resolution. Even so, there’s no guarantee.

Looking at the enterprise world, we can see that the full-stack model is obeyed and followed religiously, with companies offering full support (for what they own) for many years, sometimes even more than a decade. The stack isn’t big, but whatever is inside it gets proper ownership and accountability. This works beautifully, and it’s one of the reasons why Linux has found a happy home in many a multi-billion-dollar organization.

The Linux desktop does not have this luxury – and the problems users face and report rely mostly on chance for being resolved. The blameshifting syndrome (it’s a syndrome because it’s a human emotional condition) also takes form in the burden of proof (innocent until proven guilty) resting with the user. In other words, not only do users have to trouble themselves with reporting issues, often a tedious manual process, they are challenged by product owners with no direct accountability or affiliation to actually prove that the code is faulty in some way. Users end up running tests, collecting logs and spending a lot of time trying to act as an intermediary between bad user experience and software development. This is not a healthy or efficient process in any way.

The start to unraveling this problem rests in creating fully owned software stacks. This brings a whole new range of other issues with it. For instance, if more than one distribution decides to include a particular application in its bundle, who gets to be the owner? Say Firefox. It is the default browser in most Linux distributions, but none of them actually own the code. So if there’s a browser bug, what happens then?

The wrong answer is a non-deterministic one. The right one – if we follow the wholly owned stack model – is that distro owners are responsible for issues with the bundled software, even if they did not write the code themselves. Hence, Firefox (or Mozilla) only comes into play after the distro team takes ownership of any possible issues, and handles them through back channels with the relevant parties. The important thing is, from the user perspective, there’s only one address, and that’s the distribution team.

In the long run, this will help distribution teams weed out software that gets no active development, and even more importantly, no active maintenance, and narrow down the choice to products that continuously improve through their life cycle. Of course, there’s the philosophical question of SLA, support time frames, expected results, and more, but that’s not something that can be easily solved. Not the topic here.

PEBKAC

Today, error reporting on the desktop takes two forms: 1) for problems that are deterministic, usually application crashes that generate a crash core and/or trigger a signal that lets the system know something has gone wrong, there’s a semi-automated process of sending the report back to the vendor; 2) for issues that are not perceived as problems by the system (like visual bugs, odd behavior, slowness), users need to manually report these to whoever they feel is the right address – the vendor or the app developer, both, or someone else entirely – and then follow through the blameshifting tribulation and error collection exercise that is entirely manual.

The problem with problem reporting is that the process is not reliable. Users are NOT reliable. There’s an almost infinite variance among users in terms of their skills, needs, usage patterns, abilities, patience, problem solving, and dozens of other variables that determine what will eventually be reported. In a wholly owned stack, you cannot rely on random goodwill to fix issues and/or produce consistent results, because the errors themselves will come with an inconsistent flair. The human factor must be removed from the equation.

Automated and intelligent reporting tools

I will now try to elaborate on several mechanisms that I believe should be present in every operating system, and should be active at all times. Yes, we touch on the concepts like telemetry and privacy and tracking, and this touches a sensitive spot, but then, it is possible to do this in a completely anonymous way, with zero personal information, and still achieve the desired results.

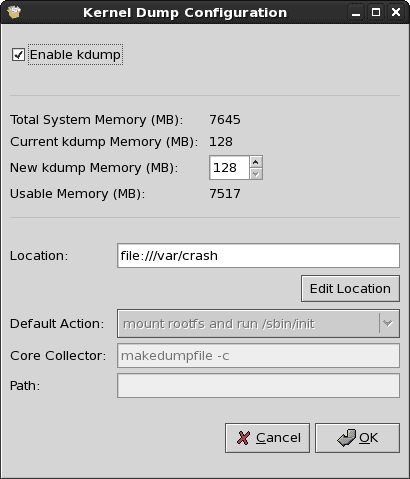

Kernel crash collection

This is a first must. Kdump should be automatically configured on every system – without asking the user to set up or change anything. Since this is nerdy territory, there should be no expectations that users understand the importance of the process. And as I’ve outlined in my 2012 Linux Journal article and 2014 LinuxCon presentation on this topic, it is possible to report kernel problems in a very simple and fast way. Effectively, it takes a single string (exception RIP + offset) to generate a unique kernel crash identifier and send it to the vendor. It contains zero personally identifiable information, and it’s a great starting point to allow initial investigation.
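To make the single-string idea concrete, here is a minimal sketch, assuming the crash tooling hands us the exception RIP as a symbol-plus-offset string. The function name `crash_id`, the sample symbol and the choice of SHA-256 are illustrative assumptions, not part of any existing reporting tool.

```python
import hashlib


def crash_id(exception_rip: str) -> str:
    """Return a short, stable identifier for a kernel crash.

    exception_rip is the symbol+offset string of the faulting instruction,
    for example "sysrq_handle_crash+22" (a made-up sample value).
    """
    # Collapse whitespace so the same crash always maps to the same ID.
    key = " ".join(exception_rip.split())
    # The hash carries no user data: it is derived from the code location only.
    return hashlib.sha256(key.encode("utf-8")).hexdigest()[:16]


if __name__ == "__main__":
    print(crash_id("sysrq_handle_crash+22"))
```

Two machines hitting the same bug produce the same identifier, so the vendor can count occurrences without ever seeing a memory dump.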

Now, let’s get innovative. We expand the error reporting to include some additional basic pointers, and then, if we do this in a central manner, we can then start deriving some intelligence from the data, figuring out types of hardware versus crash reports, kernel versions versus crash reports, and many other dimensions. With a smart backend to continuously monitor and alert on the global Linux kernel behavior, we can then focus on fixing critical issues, working with hardware vendors, and more.
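As a rough sketch of what such a backend might compute, assume every report carries just three anonymous fields: a crash identifier, a kernel version and a hardware model. The `CrashReport` type and `top_combinations` helper below are hypothetical names used for illustration only.

```python
from collections import Counter
from typing import Iterable, List, NamedTuple, Tuple


class CrashReport(NamedTuple):
    crash_id: str  # anonymous identifier, e.g. a hash of RIP + offset
    kernel: str    # kernel version string
    hardware: str  # hardware model or chipset name


def top_combinations(
    reports: Iterable[CrashReport], n: int = 3
) -> List[Tuple[Tuple[str, str], int]]:
    """Count crashes per (kernel, hardware) pair and return the n most common."""
    counts = Counter((r.kernel, r.hardware) for r in reports)
    return counts.most_common(n)
```

The same counting can be repeated over any other dimension (driver, desktop environment, library version) to see where crashes cluster.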

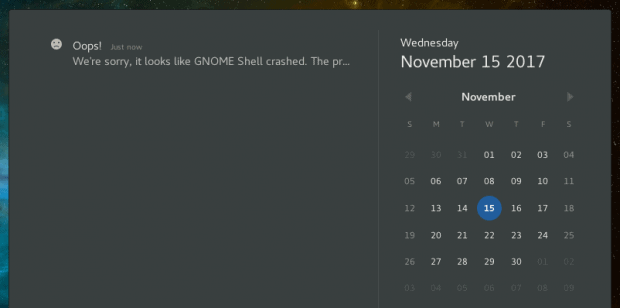

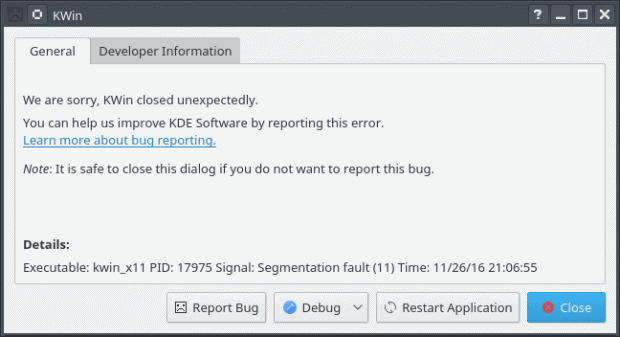

Application crashes

This is the second one, and it’s already well covered. But even so, today, users get an alert from application reporting tools. Gnome, Plasma, you name it. The issue is, it’s a hassle and distraction. Then, users need to manually approve these reports. Sometimes, distros also need to download symbols, and there might be another step or three in the process. Unnecessary.

It is possible to do all these steps in the background – and perform error reporting in an elegant way that does not interfere with what the user is doing. Since privacy is important, sending complete cores is out of the question as they may contain memory pages with sensitive data, but the trace itself is already more than what you’d get from a lazy user choosing not to do anything with the problem.

Non-determined problems

Let me give you an example. On my LG RD510 laptop, it is impossible to resume from suspend. This has to do with the AHCI mode for ATA drives, which get link resets, and effectively, you must cold-boot the system to get back to a working desktop. In this case, automated tools won’t really work because the underlying disk is no longer recognized, and it is impossible to write logs, but also, the condition does not trigger kexec and start a new kernel as in the case of a kernel crash – although this is a viable possibility.

However, what the system COULD do is track the state of its actions. Determinism is the key. Validation of input and output. The golden rules of software. If an operation starts, it should end. And if it does not end, it means we have a problem. Technically, something like this could be described in the following way:

- The laptop suspend action is activated in some way

- The system writes a file to the disk (atomically) – could be an empty file named SUSPEND

- On resume, the system checks for the presence of the file, deletes it and writes a new one called RESUME_OK

Let’s go back to my laptop and its resume issues. When the system boots, it can run a series of basic health checks to see whether the previous session had completed gracefully (reset versus reboot, for example). One of the checks may be the presence of REBOOT, SHUTDOWN or SUSPEND placeholders to figure out how the last session ended. The presence of a SUSPEND file in a freshly booted session might indicate that the system did not resume correctly. This may not tell us what had happened, but we would at least know that the resume was not successful.
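The placeholder scheme can be sketched in a few lines. This is only an illustration under assumptions: the marker names come from the text above, while the state directory, the function names and the three result strings are hypothetical.

```python
import os
import tempfile
from pathlib import Path

# Marker names follow the text; where they live on disk is an assumption.
ALL_MARKERS = {"SUSPEND", "RESUME_OK", "REBOOT", "SHUTDOWN"}


def write_marker(state_dir: Path, name: str) -> None:
    """Create an empty marker file atomically (temp file, fsync, rename)."""
    fd, tmp_path = tempfile.mkstemp(dir=str(state_dir))
    try:
        os.fsync(fd)  # push the file to disk before the system goes down
    finally:
        os.close(fd)
    os.replace(tmp_path, state_dir / name)  # atomic rename on POSIX filesystems


def mark_suspend(state_dir: Path) -> None:
    """Call just before the suspend action starts."""
    (state_dir / "RESUME_OK").unlink(missing_ok=True)
    write_marker(state_dir, "SUSPEND")


def mark_resume_ok(state_dir: Path) -> None:
    """Call once the system has resumed successfully."""
    (state_dir / "SUSPEND").unlink(missing_ok=True)
    write_marker(state_dir, "RESUME_OK")


def check_previous_session(state_dir: Path) -> str:
    """Boot-time health check: report how the last session ended."""
    present = {p.name for p in state_dir.iterdir()} & ALL_MARKERS
    for name in present:
        (state_dir / name).unlink()  # start the new session with a clean slate
    if "SUSPEND" in present:
        return "resume-failed"  # suspended, but never marked as resumed
    if present & {"REBOOT", "SHUTDOWN"}:
        return "clean"
    return "unclean"  # no marker at all: reset or power loss
```

On the LG RD510, the boot after a failed resume would find SUSPEND still in place and return "resume-failed", which is exactly the signal we want to record.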

Enter data intelligence – collect and monitor suspend & resume information over several sessions. Likewise, we could monitor network connections, reboots, or whatever else we fancy, and then check if the system has had any interruption in its normal activity. That information (a simple bitmask of operational states) can then be reported to the vendor, and again, similar to kernel crashes, we can compare it to anonymous information like hardware, kernel versions, desktop environment versions, drivers, libraries, and more.
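A bitmask of operational states could look something like the sketch below. The flag names and the set of tracked states are assumptions picked for illustration; a real tool would choose its own.

```python
from enum import IntFlag
from typing import Iterable


class SessionState(IntFlag):
    """One bit per operation that completed successfully in a session."""
    BOOT_OK = 1
    SUSPEND_OK = 2
    RESUME_OK = 4
    NETWORK_OK = 8
    SHUTDOWN_OK = 16


def encode(states: Iterable[SessionState]) -> int:
    """Fold the observed states into a single integer for reporting."""
    mask = 0
    for state in states:
        mask |= int(state)
    return mask


def missing(mask: int, expected: SessionState) -> SessionState:
    """Return the expected states that are absent from a reported mask."""
    return SessionState(int(expected) & ~mask)
```

A session that booted and suspended but never resumed would report the value 3, and comparing it against the expected set immediately flags RESUME_OK as the missing step.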

If privacy is really crucial, then the intelligence module (some service) could run locally, use a local and encrypted database, and run its checks without any connection to the mothership, and then potentially alert the user (or whoever) that the particular hardware type or kernel version or anything might not be compatible, or that certain actions cause problems.

The metrics could also include all the kernel modules and the associated hardware, temperature and fan sensors, CPU behavior, all the drivers, everything. It would help generate a much clearer picture of what the desktop does. Again, this can be done without any commercial angle – no personal tracking to generate custom ads, no global big brother databases of user behavior patterns. Just pure technical data.

Be tough

The second side of the coin is actually NOT doing any actions on platforms that are known to be incompatible. Going back to the resume issue, if the distro “knows” that it cannot reliably suspend and resume on a particular hardware type (and whatever other combo of components causes it), then the system should prevent the suspend action until the issue is resolved.

I would actually prefer for distributions to refuse to install on “buggy” hardware rather than complete and then end up with a wonky session that cannot be reliably used. Looking back at my tests in the past decade, I had Realtek Wireless issues on my Lenovo G50 laptop, Broadcom Wireless issues on my HP laptop, Intel Wireless issues on Lenovo T400, suspend issues on the LG RD510, occasional Intel graphics problems on several machines, and the list goes on. Some of these could be resolved with tweaks – essentially modprobe fixes, which begs the question, why are these not offered/included with distributions, and/or if these smart tools were to exist, could such fixes be offered? But some could not. In that case, the system installer might actually give the user a choice – do they wish to proceed with an installation, knowing they could have problems that do not have known workarounds or permanent solutions?

And here, the live session can immensely help determine what gives. Most of the problems happen both in the live and installed systems, so a smart data module could compile the necessary list of known bugs and use them to determine whether the installation ought to be performed, or prompt the user. This is better than bad user experience.
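Here is a minimal sketch of such a gate, assuming the distro ships a list of known issues keyed by hardware and action. The `KnownIssue` structure, the sample entries and the three outcomes are hypothetical, and the workaround text is deliberately left unspecified.

```python
from typing import Iterable, List, NamedTuple, Optional


class KnownIssue(NamedTuple):
    hardware: str              # hardware model or chipset
    action: str                # e.g. "suspend", "wireless"
    workaround: Optional[str]  # None when no fix is known


# Illustrative entries only, loosely based on the examples in the text.
KNOWN_ISSUES: List[KnownIssue] = [
    KnownIssue("LG RD510", "suspend", None),
    KnownIssue("Realtek Wireless", "wireless", "modprobe tweak (unspecified)"),
]


def decide(detected_hardware: Iterable[str], action: str,
           issues: Iterable[KnownIssue] = KNOWN_ISSUES) -> str:
    """Return 'allow', 'apply-workaround' or 'block' for the given action."""
    detected = set(detected_hardware)
    matches = [i for i in issues
               if i.action == action and i.hardware in detected]
    if not matches:
        return "allow"
    if all(i.workaround for i in matches):
        return "apply-workaround"
    return "block"  # or prompt the user, as the installer might
```

The installer or the power manager would call `decide` before acting, so a known-bad suspend is refused up front instead of ending in a cold boot.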

Bugzilla? WHICH Bugzilla?

Now let’s consider the option of bugs and issues being reported automatically, with this or that amount of anonymous data. Where should it go? We already know that the issues ought to be channeled to distribution owners, but then if a problem affects a component that’s present in other distributions, other teams may not see it.

I believe that individual project bugzillas – whatever they may be, a forum, a GitHub page, etc – can remain around, but they must source their data from a central database. Imagine if all issues were uploaded to one system. We could then run intelligence reports that cross distro boundaries. We could focus on ALL Plasma or Gnome issues, not specifically Kubuntu or Antergos or Solus. And if there’s an issue in gstreamer or libmtpdevice, we could see how they map across multiple distros or desktop environments. It is very difficult to achieve such a level of situational awareness with existing tools. The fragmentation is not just in the end product. It’s everywhere.
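To illustrate the kind of cross-distro report a central database would allow, here is a small sketch using an in-memory SQLite table. The schema, the column names and the helper functions are assumptions for the sake of the example, not a proposal for the real thing.

```python
import sqlite3
from typing import Iterable, List, Tuple


def build_db(rows: Iterable[Tuple[str, str, str, str]]) -> sqlite3.Connection:
    """Load (distro, desktop, component, summary) rows into a throwaway database."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE issues (distro TEXT, desktop TEXT, component TEXT, summary TEXT)"
    )
    db.executemany("INSERT INTO issues VALUES (?, ?, ?, ?)", rows)
    return db


def issues_per_distro(db: sqlite3.Connection, component: str) -> List[Tuple[str, int]]:
    """Show how issues in one shared component spread across distributions."""
    cur = db.execute(
        "SELECT distro, COUNT(*) FROM issues "
        "WHERE component = ? GROUP BY distro ORDER BY distro",
        (component,),
    )
    return cur.fetchall()
```

A project lead would filter on what they own, while anyone else could run the same query for gstreamer and see at a glance which distros are hit.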

Project leads could then focus on fixing what they own – any which database filter will allow selecting the right data – but a central mechanism, similar to kerneloops.org, could help understand better where problems lie. And then, we might actually see TRUE COLLABORATION in the community. More people to look at issues and offer suggestions. An extra pair of eyes to validate a fix. More brain diversity. More. Period.

This could potentially reduce rivalry among teams, and minimize dependency on individual software engineers leading projects. If people leave, there would be less chance of projects going dead. People might actually develop sympathy or understanding for their colleagues and their work, and in the long run, the very fact there’s consistency in how problems are reported and seen could lead to consistency in how they are resolved. It would be chaos at first, but in the long run, it would lead to more order and quality.

Beyond 2000: Every problem must have a trail

Crossing the distribution boundaries brings in a whole new range of possibilities. If there was a way to work on problems in a unified fashion, perhaps there’s a way to work on making sure problems never happen. From a purely technical perspective, a resolved bug must never resurface. Likewise, there should be a way to test the resolution.

Every issue and its closure should become an entry in a validation suite, used to determine whether a distribution release passes QA and should be sent out into the open. I do know that distributions test their software, and usually follow the Alpha-Beta-RC model, but again, there’s a huge amount of human interaction and inconsistency in the process – which leads to inconsistency in the user experience. There’s also a significant duplication of effort, because essentially identical components are re-tested across many distros because teams work separately on their products.

But the same way there’s POSIX, there could be UI-POSIX, which goes beyond API and touches on every aspect of the desktop experience. It should be reflected in methods and procedures, and it should also mandate how software ought to be tested, released and maintained. There’s a huge element of boredom in this – maintaining code is not sexy or exciting, but it is the bread and butter of good, high-quality products. Fixing bugs is far more important than writing a new version of a faulty program. The current model does not align well with this, as most of the user-space code is written by maverick individuals. But we have the kernel as the example of immense commercial success – with 80% contributions done by corporations and enterprises.

We can start small – a validation suite that tests ALL distributions in the same manner, regardless of their desktop environment and app stack. The suite includes deterministic tests and covers all and every bug ever seen and found and resolved in the past, linking to one database. The next step is aligning validation procedures, based on the obvious discrepancies that the validation suite will discover.
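As a very small sketch of the principle, each resolved bug registers one deterministic check, and the release gate passes only if every check passes. The registry, the decorator and the sample bug ID are hypothetical; the sample check is a stub standing in for a real test.

```python
from typing import Callable, Dict, Optional

# Maps a bug identifier from the central database to its regression check.
REGRESSION_CHECKS: Dict[str, Callable[[], bool]] = {}


def regression_check(bug_id: str):
    """Decorator: tie a check function to the bug it guards against."""
    def register(func: Callable[[], bool]) -> Callable[[], bool]:
        REGRESSION_CHECKS[bug_id] = func
        return func
    return register


@regression_check("EXAMPLE-1")  # hypothetical bug ID
def example_check() -> bool:
    return True  # stub; a real check would exercise the fixed behavior


def run_suite(
    checks: Optional[Dict[str, Callable[[], bool]]] = None
) -> Dict[str, bool]:
    """Run every check; an exception counts as a failed check."""
    checks = REGRESSION_CHECKS if checks is None else checks
    results = {}
    for bug_id, check in checks.items():
        try:
            results[bug_id] = bool(check())
        except Exception:
            results[bug_id] = False
    return results


def release_allowed(results: Dict[str, bool]) -> bool:
    """A release goes out only if no resolved bug has resurfaced."""
    return all(results.values())
```

Because the checks are keyed by bug ID, the same suite can run unchanged against any distribution that pulls its issues from the shared database.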

In the end, we could still have color and variety, but products should be developed to the same high standards. Think automotive industry. You get tons of car manufacturers and models, but they still adhere to many strict standards that define what a car should do, and in the end, you essentially get a consistent driving experience – I don’t mean that subjectively – the cars all behave the same. Linux distributions could potentially achieve the same result, in that they all give the user the same essential experience, even if details may vary. But there are many fundamental aspects that cannot just be arbitrarily chosen or ignored the way we have it today. Take a look at any which five distro reviews I’ve done in the past couple of months and you will see what I mean. Heck, just compare Ubuntu Aardvark to Kubuntu Aardvark!

Once we get to this common language, the next step is to maintain – and evolve it. The presence of automated system health tools will guarantee that distributions continue with a consistent experience after their release. It’s not just the GA release that matters. There will be new code introduced with every update, and that must also work perfectly and WITHOUT regressions.

Conclusion

My Open Linux idea is not the first nor the last benevolent thought to have sparked in the community in the past two decades. I know there have been attempts to bridge some gaps, but there has not been a comprehensive approach to the whole fragmentation mess that is plaguing the desktop world. I truly believe that the Linux desktop can become better, but it requires a unified baseline. At the moment, the foundations are weak. And the proof is in the pudding. The Linux desktop remains a marginal concept with barely any market share, low quality, and constant regressions that keep it from becoming a serious contender against the established players.

The notion of community is mostly a bittersweet dream. Linux has succeeded by having strict hierarchy and a strong commercial model. Look at Android. There’s another example. The desktop as we know it lacks these fundamentals. Perhaps we don’t want it to become a commercial entity, and that’s fine, but it does have to become a professional product. And to be a professional, you must act like one. It starts by having a first-class understanding of the system and being fully in control of its failings. We don’t have that at the moment. Linux distros may be open-source, but they are not truly open. Hopefully, this article could be a beginning of that change. Food for thought.

Cover image: Courtesy of nasa.gov, in public domain.

Agree but QA/QC and easy to use polished and consistent UI require hundreds of millions if not billions of dollars. Desktop Linux is very hard to monetize, it’s the curse of being free and open source. Things like QA, bug fixing, preserving compatibility, benchmarks, etc are not creative, are not fun and have no appeal to amateurs but must be done in order to make Linux usable for the average Joe.

I see 2 solutions to this problem:

1) Android / Chrome OS way, locking down and selling services, which I don’t like much.

2) Use a new sort of open source license which allows the original author to require money for distribution and commercial use, which IMHO is the best compromise between freedom and fairness.

My comment was detected as spam; it wasn’t. I’m a real person and long(ish) time Linux user.

This isn’t specific to Vector Linux only, Mint, Debian and some other distros do this too. The classic opensource “user is a tester” method is insufficient for a good product because the only people who use/test Linux are those whose time is worthless. Most people want a working product.

The only solution is money and stringent QA.

I think some, if not most, Linux testers are people who like a particular distro and want to see it succeed; that was the category I would say I was in with Vector.

I don’t do much testing nowadays though, partly because I have less time than I once did but also because I didn’t find that the faults I identified were being corrected.

Linux amateur here (posting from Salix, a good distro which is based on Slackware 14.2). I’m not competent to comment on the technical arguments Dedo makes in his article, so I’ll confine myself to more general comments.

I think Linux’s curse is the other side of its blessing; its massive variety means that teams of dedicated and talented people will work on half a dozen similar but unrelated projects in parallel instead of one (as in the case of Microsoft).

How good would KDE or Gnome be, for example, if they didn’t have to compete with each other and XFce too? Why the continuing lack of standardisation of Linux packages so developers have to deal with deb, rpm and the Slackware package format (whatever it is now)?

Do we really need literally dozens of competing distros like Slackware, Fedora, Gentoo, Arch, Debian and Ubuntu, and distros derived from them? The answer; probably not, but each distro has its advocates who think that if and when it comes to the crunch, theirs should be the one to survive.

I love Linux and have every intention of continuing to use it on my desktop computer, but surely it must be obvious from the distro reviews I keep reading (mostly from Dedo himself, it must be said) that desktop Linux has a problem and good minds should be brought to bear on how to solve it (or them).

Would more money be the answer? If so (and I’d say it was very likely), maybe one solution would be some kind of annual dual subscription model, where users paid something, say $25 a year in total (about half a dollar a week) towards their favourite distros as well as to the Linux developer community as a whole. The final product should be much cheaper than Windows (obviously, because it’s almost bound to be a less rounded and feature-rich product than a Windows release with all the money Redmond has to spend), but cost enough to make good programmers think it was worth their while to work on Linux.

As long as the majority of people who use desktop Linux continue to think they shouldn’t have to pay at all, I think the Linux distro world will continue to go nowhere fast.

Deeply obvious. It’s something that open-source AND very successful tech companies have to watch out for. I call it “googling” – “Oh, this is so cool, I’ll work on it till I get bored.”

The full stack model only works if you have the resources to support it. This is why you see it used with big companies, but not volunteer projects. And that’s what most Linux distros and DEs are: volunteer projects. The exceptions are SUSE, Canonical, and Red Hat. The closest we get to a Full Stack Linux is RHEL with the GNOME desktop. But notably, you have to pay for it (full-stack ownership and support isn’t cheap!). And even then, GNOME has problems with resourcing. It takes a lot of effort to maintain their stack, which consists of Glib, GIO, GVFS, GTK, GNOME, and a whole host of FreeDesktop projects that they basically control. It’s a huge amount of work and their projects often suffer from too much code and not enough developers. It’s not just them: everybody suffers from this.

For projects without a lot of commercial backing, I think the best we can hope for is inevitable centralization as marginal projects die off due to bit rot. Eventually we’ll be left with a few winners that everyone grudgingly agrees to standardize on simply because they’re the last ones standing. There are a lot of distros and DEs that simply don’t generate enough developer interest to keep moving without becoming buggy messes.

Good post. I’ve tried CentOS, which is an open source distro based on RedHat, and whilst it works pretty well on the whole it seems to be quite particular about what software it works with – Chromium wouldn’t work with it, or

Thank you Igor, one more excellent down to the earth realistic approach to the problems Linux desktop faces and I get it when you say we’re back to 2005. Unfortunately, it happened with Ubuntu. Oh Well… we need a “war room” with all the responsible people to solve this. (War Room is a reunion involving the main representatives of Linux world to get the crap together: Mark, Linus, RedHat CEO, CentOS leader, Debian leader, etc.).

Technology accelerates evolution, and it is something that happens all the time, everywhere. Linux has contributed enormously to technology, especially as it dominates the top 500 supercomputers.

Linux needs a universal tool which can help to solve issues automatically.

As mentioned in this article, such a tool should not suspend if this function will not work, and at the same time it should send a bug report to a common place where devs and users can watch and deal with it.

The Unix mentality of doing one thing and doing it well is not wrong; our development should simply focus on a tool that supports this mentality by watching, informing and, where possible without disturbing the other functions of the system, trying to solve the problem by re-enabling the failed function.

For example, when you try to load your graphics and the driver is not loaded, the tool could start a VESA driver so the system can load and function decently, if not ideally, and at the same time send a bug report and a message telling the user where to go for further assistance.

Or, when a kernel upgrade happens, the tool should not allow the upgrade if the wireless driver will not load.

This can be done if the system loads the kernel in a tested environment like grml to check that the basic functions are good, and only then proceeds with the installation.

Tools are there to make our lives function better and more efficiently.

It sounds scary and weird but slowly we become a Star trek Borg society, where the biological and mechanical components will be together.

I think it can be done. Didn’t MS turn its consumers into beta (or alpha) testers? It set up to field the feedback. Maybe using the usual social media suspects, a group of gatekeeper volunteers (qualified to participate) could pick a/any Linux distro and ‘announce’/’advertise’ testing with [sincere] committed assistance (to the average … like me) for that distro. The requisite for download of the distro would be willingness to provide feedback with all pertinent data. Because of the social media aspect, and if the model is at all successful, the competitive (some prefer cooperative) nature of mankind will take over to extend participation.

Or, promote the whole thing as a chest thumping contest to cross a threshold. Tap into that urge to get off of the sidelines and compete. Make it accessible so that those that can will not be able to stand it, and have to jump into the fray (rather than remaining boxed in so to speak).

But, yes the feedback will ultimately provide cross platform and cross os information and begin to resolve each aspect you mention above, including winnowing.

Aside: I just live with a lot of applications that don’t work, but it would be nice otherwise for a change.

It is just frustrating that every new distro release is a gamble. You have one good release and then some lousy follow-ups. There is no growth of quality visible. And the whole Linux landscape seems to be dominated by geeky egomaniacs who have zero interest in producing top-notch products but causing one flame-war after the other – and all that under the pretense that free software must remain free. If freedom means here that Linux desktop distro publishers can mess around with the user-base then the Linux desktop will never leave the niche of a few enthusiasts.

And therefore YES, we need a new starting point which is ruled by workability and pragmatism and not by ideology and egoism.

Install Arch Linux – no semi-annual disappointments – as it’s a rolling release. I’ve been using it for almost 5 years. If you read the Arch Wiki and follow the instructions there you won’t go wrong. Every weird piece of software that I’ve looked at is available in the AUR. I’ve only had problems installing once or twice from there and I have almost 2000 packages on my main computer.

Hi Steve, I tried Arch Linux some years ago and it was a pleasant experience. If the next Ubuntu LTS version won’t fulfill my expectations I will give Arch Linux a more serious try. Thanks 🙂

Hi Steve. I tried ArchBang once and it was fine – great, in fact – until I tried to upgrade it and promptly broke the system. Apparently you need to be very careful about upgrading an Arch or Arch-derived system (something I had to learn the hard way).

Widespread adoption of Flatpak is probably inevitable as folks get sick to death of waiting for Linux geeks to get their heads out of their arses. Letting application developers have free rein over the installed OS was stopped by Apple & Microsoft many years ago.

Is it a good use of resources having 700+ Linux distributions?

Red Hat’s Atomic is probably a hint of things to come. http://red.ht/2ptRiQA

I could see something like this becoming a workstation/mobile/embedded OS with refinement over time.

I’m not spruiking one company, just citing an example.

“Is it a good use of resources having 700+ Linux distributions?”

No it isn’t, but most of those distributions have a cadre of committed developers who spend much of their spare time developing it and a somewhat larger user base, many of whose members don’t want to use anything else. It’s a non-trivial problem to shrink that pool of 700+ distros, even though a lot of it to an outsider looks like needless duplication of effort.

For those who think Linux is 100% ready for the prime time, this is an actual error message I got when trying to run Opera in a Linux distro (Refracta);

“You need to make sure that

/usr/lib/i386-linux-gnu/opera/opera_sandbox is owned by root and has mode 4755.”

To be fair, silly verbose devvy errors are not exclusive to Linux. Take a look at my funny prompts article and you’ll see plenty of those. That’s not the problem. The problem is that the system has no way of determining failures in a meaningful way and fixing them smoothly, again, as I’ve outlined in the “paved in bugs” article. Some light reading:

https://www.dedoimedo.com/computers/funny-stuff.html

http://www.ocsmag.com/2018/02/16/plasma-the-road-to-perfection-is-paved-with-bugs/

Dedoimedo